Adaptor Generic (version 1)

The Connector's Generic adaptor provides the ability to import and export data from several different places where data can be stored. This adaptor can be used when no adaptor exists that integrates into a specific business system, or a business system's adaptor does not support a specific data import or export.

Prerequisites

Please make sure you have read through the Get Started/Overview before continuing down this document.

Also make sure you understand the concepts of:

- Connector Adaptors.

- Connector Routines.

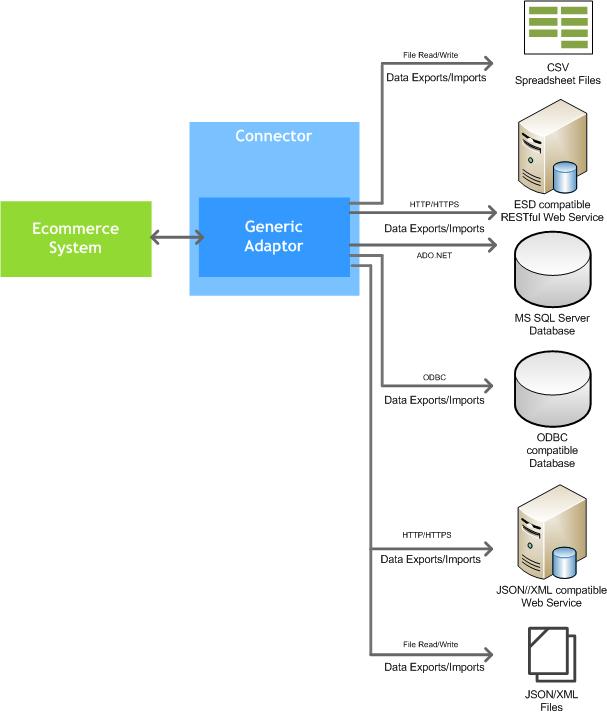

Overview of Data Connectivity

The Generic adaptor provides six different ways to export and import data from different data sources. The supported data source types are:

- CSV Spreadsheet Files

- Web Services that support Ecommerce Standards Documents

- MS SQL Server Databases

- ODBC Compatible Databases

- XML, JSON and CSV files stored locally, or from HTTP web services, or in the Connector itself

- SQLite Databases (version 3)

Within the Generic adaptor multiple data sources can be set up that each export or import data for a given data source type. For example a data source could be set up that reads product data from a spreadsheet file that is saved in the CSV file format and exports the product data to an Ecommerce system.

An unlimited number of data sources can be created within the Generic adaptor. Each data export and data import within the adaptor can then be assigned to one of the data sources set up. This allows different data to be obtained from different data sources. For example the product data may be obtained from a CSV spreadsheet file, whereas the customer account data may be obtained from an MS SQL Server database table.

Data Source Type: JSON/XML/CSV - Webservice/File

Within the Generic adaptor data sources can be set to read and write JSON, XML or CSV text data from a compatible web service, from files stored locally on a computer, or from data stored directly in the adaptor. This allows the Connector to read data from, or push data into, cloud based platforms and business systems that support querying JSON, XML or CSV data over HTTP. Additionally the "JSON/XML/CSV - Webservice/File" data source type can read from JSON, XML or CSV files saved to the filesystem that the Connector is installed on.

Since JSON and XML data structures are hierarchical, the data source type can be configured to read through the data by matching on objects within the tree that meet certain criteria. These objects then act like table rows, where JSON or XML data can be extracted from each found object's data values, child objects, or parent objects. SQL-like functions can be used to manipulate and combine JSON or XML data for each data field in any given data export, which allows for highly customised querying of data in a relational database like fashion. CSV text data can also be converted into a JSON data structure, allowing the same advanced querying capabilities as with the JSON and XML data structures.

If XML data is being queried, then it will be first turned into a JSON data structure, which then allows the data to be queried using JSON notation. Built into the Connector application is the JSON/XML/CSV Query Browser that allows testing and configuration to be performed, which can be very helpful when looking to set up a JSON/XML/CSV - Webservice/File data source type within a Generic adaptor.

If CSV text data is being queried, then it will be first turned into a JSON data structure, which then allows the data to be queried using JSON notation. The data source will read the first row of the CSV text data to find the names of the column headers, then use these to name the properties of each JSON object retrieved for each subsequent row. The JSON/XML/CSV Query Browser also allows testing and configuration to be performed with CSV data, which can be very helpful when looking to set up a JSON/XML/CSV - Webservice/File data source type within a Generic adaptor. Note that only CSV data saved with UTF-8 or ASCII character encoding is supported by the adaptor.
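The CSV to JSON conversion described above can be pictured with a minimal Python sketch. It is an illustration only, not the Connector's own code: the function name `csv_text_to_json` is hypothetical, and the `dataRecords` key is assumed from the `dataRecords[*]` path that this document states must be used for CSV files.

```python
import csv
import io
import json


def csv_text_to_json(csv_text: str) -> dict:
    """Convert CSV text into a JSON-style structure.

    The first row supplies the column headers, which become the property
    names of the object built for each subsequent row.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    # Assumption: records sit under a "dataRecords" key, as implied by the
    # dataRecords[*] path used for CSV data.
    return {"dataRecords": [dict(row) for row in reader]}


csv_text = "code,name\nTEATOW123,Tea Towel\nCP222,Cup\n"
converted = csv_text_to_json(csv_text)
print(json.dumps(converted, indent=2))
```

Every value stays a string in this sketch; whether the adaptor infers numeric types during conversion is not stated here.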

When JSON/XML/CSV data is being written, the Generic adaptor will first build a JSON data structure, then if configured it will convert the JSON into an XML document that can be saved as a file to the filesystem, or else sent across to a web service using the HTTP/HTTPS protocol.

JSON/XML/CSV - Webservice/File Data Source Type Settings

Below are the settings that can be configured for each JSON/XML/CSV - Webservice/File data source type.

| Setting | Description |
|---|---|
| Data Source Label | Label of the data source that allows people to recognise the type of data or location where data is read or written to. |
| Protocol | Sets the protocol that determines whether data is sent to and received from web services using the HTTP web protocol, or read from and written to files locally. Set to one of the following options: |
| Host/IP Address | If the Protocol setting is set to http:// or https:// then set the IP address or website domain where the JSON, XML or CSV data is being read from or written to. Additionally you can specify any root folders that are common to the web service, which will be prepended to each data export's or import's URL. Example values: |
| HTTP Request Headers | If the Protocol setting is set to either http:// or https:// then set the headers that will be placed in all data export/data import HTTP requests made to the web service to obtain or push data. Common headers include: Content-Type: application/json |
| Data Type | Specifies the format of the data being sent out by data imports, or read in by data exports, from either a web service or file. Set to either: |
| HTTP Request Records Per Page | If the Protocol setting is set to either http:// or https:// then when a data export is run to request data from a web service, if this setting has a value larger than 1, it indicates the number of JSON, XML or CSV records that are expected to be returned from the web service per request. If the number of records returned equals or exceeds this value then the adaptor will make another HTTP request to get the next page of data. Many web services limit how many records can be returned in one response. When this is the case set this value, and for each data export place data hooks within the URL or body to ensure that the next page of data is requested, otherwise infinite loops may occur where the same page of data is requested again and again. |
| Connection Testing URL | If the Protocol setting is set to either http:// or https:// then specify the endpoint URL that can be used to test if an HTTP request can be sent to the web service configured with the settings above. The URL does not need to be the full URL, since the values in the Protocol and Host/IP Address settings will be prepended to this URL. |
| Timeout Requests After Waiting | If the Protocol setting is set to either http:// or https:// then set the number of seconds that the adaptor should wait to receive an HTTP response from the web service being called for a data export or import before giving up. Choose a number that is not too small or big, based on how slow or fast your internet connection is, and how long the web service that data is being sent to or from can be expected to take. |
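As a rough illustration of how these settings combine, the Python sketch below prepends the Protocol and Host/IP Address values to a data export's relative URL and attaches the configured headers. The function `build_request`, the host `www.example.com/api` and the 30 second timeout are hypothetical values chosen for the example; no request is actually sent.

```python
from urllib.request import Request


def build_request(protocol, host, relative_url, headers, body=None):
    """Join the data source settings and a relative URL into one HTTP request."""
    # Protocol and Host/IP Address are prepended to the relative URL.
    url = f"{protocol}{host.rstrip('/')}/{relative_url.lstrip('/')}"
    return Request(url, data=body, headers=headers)


request = build_request(
    protocol="https://",
    host="www.example.com/api",  # hypothetical host with a common root folder
    relative_url="products?page=1",
    headers={"Content-Type": "application/json"},
)
print(request.full_url)

# The timeout setting would map to the timeout argument when sending, e.g.
# urllib.request.urlopen(request, timeout=timeout_seconds)
timeout_seconds = 30
```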

Data Source Type: CSV Spreadsheet File

Within the Generic adaptor data sources can be set up to read data from spreadsheet files that have been saved in the CSV file format. CSV (Comma Separated Values) is a file format that saves its data in a human readable form that can be viewed within any text editing application such as Notepad or Gedit, as well as spreadsheet applications such as Microsoft Excel. The data in the file is structured as a table containing columns and rows of data, with the first row containing the names of the columns. CSV files can be structured in different ways by using different characters to separate the columns, rows, and cells of data. When setting up the CSV file data source in the adaptor there are settings that define the characters used to determine how the CSV file data is read in. This allows great flexibility and customisation when reading in CSV files generated from different systems besides spreadsheet applications.

For each data export there is the ability to read in multiple CSV files of data. For example there may be four CSV files that contain product data. The Generic adaptor supports reading in all four of these files for a product data export, as long as the names and data of the files are consistent, eg. products1.csv, products2.csv, products10.csv. Settings exist for each data export to set a regular expression that will be used to match the files on, so based on the example a rule may be written to match files where their name is "product[anynumber].csv". The files themselves do not need to end with the .csv file extension, they could be set as .txt, .file, .dotwhatever. As long as the content in the file is saved in CSV text form then the adaptor will be able to read the data.
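The file matching rule from the example above can be sketched as a regular expression. The pattern below is one possible way to write "products followed by any number, then .csv" using Python's `re` module; the exact regular expression dialect accepted by the adaptor is not covered here, so treat the pattern as an assumption.

```python
import re

# Hypothetical rule: "products", one or more digits, then ".csv".
pattern = re.compile(r"^products\d+\.csv$")

file_names = [
    "products1.csv",
    "products2.csv",
    "products10.csv",
    "customers1.csv",  # different prefix, not matched
    "products.csv",    # no number, not matched
]

matched = [name for name in file_names if pattern.match(name)]
print(matched)
```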

CSV files are very useful when a business system can output and read in data in the file format, or when using a spreadsheet application to manually manage the data. For example an accounting system may not support product flags, so a Microsoft Excel spreadsheet may be used instead to manage a list of flags, and another spreadsheet used to manage the products assigned to flags. These spreadsheets can then be saved in the CSV file format, which allows the adaptor to read this data in and export it to an Ecommerce system set up with the Generic adaptor.

CSV Spreadsheet File Data Source Type Settings

Below are the settings that can be configured for each CSV data source type.

| Setting | Description |
|---|---|
| Data Source Label | Label of the data source that allows people to recognise the type of data or location where data is read or written to. |
| CSV Files Folder Location | Set the drive and root directory(s) on the file system where the CSV files are read from or written to. The location does not need to specify the full folder location, as it will be prepended to the file path for each data export/import. |
| Data Field Delimiter | Set the characters within the CSV files being read or written that are used to split each data field for each data row. For example, the comma character can be used to separate each data field. |
| Data Row Delimiter | Set the characters within the CSV files being read or written that are used to split each data row. For example, the carriage return and line feed characters can be used to separate each data row. These characters are invisible by default, however they can be set by placing \r and \n values into this setting. |
| Data Field Enclosing Character | Set the characters within the CSV files being read or written that are used to surround each value set within a data field. This ensures that the same characters used for data field and data row delimiters can be placed within the content of a data field. For example, the double quote character can be used to enclose each data field's value. |
| Data Field Escaping Character | Set the characters within the CSV files being read or written that are placed in front of Data Field Enclosing Characters to indicate that the data field enclosing characters should not be treated as such. For example, the backslash character can be used to escape the double quote enclosing character, ensuring that the double quote character will appear in the data field values. |
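To show how the delimiter, enclosing and escaping settings interact, here is a small Python sketch that parses CSV text with the standard `csv` module. The function name `read_csv_rows` and the sample data are made up for illustration, and the adaptor's own parser may behave differently in edge cases. Python's reader detects row endings by itself, so the Data Row Delimiter setting has no direct counterpart in this sketch.

```python
import csv
import io


def read_csv_rows(text, field_delimiter=",", enclosing_char='"', escaping_char="\\"):
    """Parse CSV text with configurable delimiter, enclosing and escaping characters."""
    reader = csv.reader(
        io.StringIO(text, newline=""),
        delimiter=field_delimiter,
        quotechar=enclosing_char,
        escapechar=escaping_char,
        doublequote=False,  # rely on the escaping character, not doubled quotes
    )
    return [row for row in reader]


# Rows end with \r\n; the second field contains a comma and the third an
# escaped double quote.
sample = '"TEATOW123","Tea Towel, Large","12\\" wide"\r\n"CP222","Cup","Plain"\r\n'
rows = read_csv_rows(sample)
for row in rows:
    print(row)
```

Because each value is enclosed in double quotes, the comma inside "Tea Towel, Large" is kept as content rather than being treated as a field delimiter.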

Data Source Type: ESD Web Service

Within the Generic adaptor data sources can be set up to read and write data to a web service using the HTTP protocol. This allows data to be retrieved from any location within an in-house computer network, or over the internet. The adaptor specifically supports obtaining data that is formatted in the Ecommerce Standards Documents (ESD) format. The ESD format provides a standard way to structure data that is passed to and from Ecommerce systems and business systems. The Generic adaptor supports reading and writing ESD data from a RESTful web service that supports the ESD data format. The web service itself is a piece of computer software that can receive HTTP requests and return HTTP responses, the same technology that allows people to view web pages over the internet.

Using a web service allows the generic adaptor to retrieve and write data in real time, which can be very handy when the web service is connected to a business system where up-to-date information needs to be obtained. An example of this is product stock quantities. The generic adaptor could connect to a webservice, which then connects to a business system and obtains the available stock quantities of products. This stock quantity data can then be returned to the Ecommerce system to show a person the current stock quantity of a product and whether it is available to be purchased or not.

For each data export and data import configured in the Generic adaptor there is the ability to set the URL of the web service that will be used to request data, or export data to the webservice. For the web service data source there are also settings to configure the IP address or domain used to connect to the web service, the protocol, as well as set any custom key value pairs in the HTTP request headers.

In order to develop a web service that allows connections with the Generic adaptor the webservice will need to conform to the ESD data format, as well as be a RESTful web service. More information about the Ecommerce Standards Documents can be found at https://www.squizz.com/esd/index.html

HTTP Ecommerce Standards Documents Data Source Type Settings

Below are the settings that can be configured for each HTTP Ecommerce Standards Document data source type.

| Setting | Description |
|---|---|
| Data Source Label | Label of the data source that allows people to recognise the type of data or location where data is read or written to. |
| Protocol | Sets the protocol that determines whether data is sent securely or insecurely to and from other web services using the HTTP web protocol. Set to one of the following options: |
| Host/IP Address | Set the IP address or website domain where the data is being read from or written to when the adaptor calls data exports and data imports to run. Additionally you can specify any root folders that are common to the web service, which will be prepended to each data export's or import's URL. Example values: |
| HTTP Request Headers | Set the headers that will be placed in all data export/data import HTTP requests made to the web service to obtain or push data. Common headers include: Content-Type: application/json |
| Connection Testing URL | Specify the endpoint URL that can be used to test if an HTTP request can be sent to the web service configured with the settings above. The URL does not need to be the full URL, since the values in the Protocol and Host/IP Address settings will be prepended to this URL. |
| Timeout Requests After Waiting | Set the number of seconds that the adaptor should wait to receive an HTTP response from the web service being called for a data export or import before giving up. Choose a number that is not too small or big, based on how slow or fast your internet connection is, and how long the web service that data is being sent to or from can be expected to take. |

Data Source Type: MS SQL Server

Within the Generic adaptor data sources can be set to read and write data to a Microsoft (MS) SQL Server database using the ADO.NET database libraries made available through the .NET framework that the Connector software uses. This allows data to be retrieved from any location within an in-house computer network, or over the wider internet if allowed. There are many business systems that use an MS SQL Server to store the system's data, and the MS SQL Server data source type within the Generic adaptor can be used to retrieve and write data for such business systems.

For the Generic adaptor's MS SQL Server data source type, each of the data exports and imports is configured using SQL (Structured Query Language). SQL queries can be set up to query and read data from tables within the database, as well as write data into specified tables. This makes it easy for a data specialist who understands the database table structure of a business system to create SQL queries that obtain data from the business system and push it into an Ecommerce system.

For the adaptor to write data back into an MS SQL Server database (such as for sales orders) it is recommended to create placeholder database tables that the Generic adaptor is configured to write data into, then a 3rd party piece of software is developed that can interpret the data and move it to all the database tables that its business system requires.

MS SQL Server Data Source Type Settings

Below are the settings that can be configured for each MS SQL Server data source type.

| Setting | Description |
|---|---|
| Data Source Label | Set the label of the data source that allows people to recognise the type of data or location where data is read or written to. |
| MS SQL Server Data Source Location | Set the computer name or IP address of the machine that is running the MS SQL Server instance, as well as the SQL Server name. Examples: |
| MS SQL Server Data Source Name | Set the name of the database or catalogue that exists within the MS SQL Server instance. |
| Data Source User Name | Set the name of the user that is used to access the SQL Server instance. Ensure that the user is set up and has permissions to access the specified database/catalogue. |
| Data Source User Password | Set the password of the user that is used to access the SQL Server instance. Ensure that the user is set up and has permissions to access the specified database/catalogue. |
| Connection Testing SQL Query | Set an example SQL query that is used to test that a connection can be made to the configured SQL Server instance, and can successfully return data. Avoid setting a SQL query that returns lots of data. Set an SQL query such as: SELECT 1 |
| Write Data Using Transactions | If set to Yes then when the adaptor's data imports are configured to import data into the SQL Server instance and multiple record inserts occur, the data will only be written to the database, in a single transaction, if all of the inserts execute successfully. If set to No and only some of the inserts execute successfully, then the data from those inserts will remain in the database and could exist in a partially completed state. |
| Write Data Using Prepared Statements | If set to Yes then when the adaptor's data imports are configured to import data into a SQL Server instance, each data field will be added to an insert query using prepared statements. This ensures that the values being inserted cannot break the syntax of the SQL query, however it means that you cannot see the values being placed within SQL insert queries in the Connector logs. It's best to leave this setting set to Yes unless you are initially setting up the adaptor, or else need to diagnose insert issues. |

Data Source Type: ODBC (Compatible Databases)

Within the Generic adaptor data sources can be set to read and write data to a database using the ODBC (Open DataBase Connectivity) protocol. ODBC is an older and widely used protocol which is supported by many different database technologies, such as MySQL, PostgreSQL and Microsoft Access, among many others. It can be used to allow data to be retrieved from any database location within an in-house computer network, or over the wider internet if allowed. There are many business systems that use ODBC enabled databases to store the system's data, and the ODBC data source type within the Generic adaptor can be used to retrieve and write data for such business system databases.

For the Generic adaptor's ODBC data source type, each of the data exports and imports is configured using SQL (Structured Query Language). SQL queries can be set up to query and read data from tables within the database, as well as write data into specified tables. This makes it easy for a data specialist who understands the database table structure of a business system to create SQL queries that obtain data from the business system and push it into an Ecommerce system.

For the adaptor to write data back into an ODBC enabled database (such as for sales orders) it is recommended to create placeholder database tables that the Generic adaptor is configured to write data into, then a 3rd party piece of software is developed that can interpret the data and move it to all the database tables that its business system requires.

ODBC Data Source Type Settings

Below are the settings that can be configured for each ODBC data source type.

| Setting | Description |
|---|---|
| Data Source Label | Set the label of the data source that allows people to recognise the type of data or location where data is read or written to. |
| ODBC Data Source Name | Set the name of the Data Source Name (DSN) set up within the Windows ODBC 32bit Data Sources application. This determines the database that will be accessed, the Windows user who will access it and the ODBC driver used to access it. |
| ODBC User Name | Set the name of the user that is used to access the database being connected to through ODBC. Ensure that the user is set up and has permissions to access the specified database. |
| ODBC User Password | Set the password of the user that is used to access the database being connected to through an ODBC connection. Ensure that the user is set up and has permissions to access the specified database. |
| Connection Testing SQL Query | Set an example SQL query that is used to test that a connection can be made to the configured database using an ODBC connection. Avoid setting a SQL query that returns lots of data. Set an SQL query such as: SELECT 1 |
| Write Data Using Transactions | If set to Yes then when the adaptor's data imports are configured to import data into the database instance and multiple record inserts occur, the data will only be written to the database, in a single transaction, if all of the inserts execute successfully. If set to No and only some of the inserts execute successfully, then the data from those inserts will remain in the database and could exist in a partially completed state. Only set to Yes if the ODBC driver being used to connect to the database supports transactions. |
| Write Data Using Prepared Statements | If set to Yes then when the adaptor's data imports are configured to import data into a database instance, each data field will be added to an insert query using prepared statements. This ensures that the values being inserted cannot break the syntax of the SQL query, however it means that you cannot see the values being placed within SQL insert queries in the Connector logs. Set this value to Yes only if the ODBC driver being used supports transactions and using placeholders in SQL queries. |

Data Source Type: SQLite Database

Within the Generic adaptor data sources can be set to read and write data to a SQLite database file. SQLite databases are relational database management systems that can be embedded in single files, and be easily distributed or transferred. SQLite is one of the most popular databasing technologies, and is heavily used for storing data on mobile devices, such as iOS, Android, and other operating systems.

For the Generic adaptor's SQLite data source type, each of the data exports and imports is configured using SQL (Structured Query Language). SQL queries can be set up to query and read data from tables within the database, as well as write data into specified tables. This makes it easy for a data specialist who understands the database table structure to create SQL queries that obtain data from the SQLite database and push it into an Ecommerce system.

Note that SQLite databases support multiple threads reading a database at the same time, but for writing data to a table in the database it may place locks on the database. The Generic adaptor uses the System.Data.SQLite driver to read and write data from a SQLite version 3 database file. Visit the driver's website to find out more information about the SQL functions and database operations that are supported.

SQLite Data Source Type Settings

Below are the settings that can be configured for each SQLite data source type.

| Setting | Description |
|---|---|
| Data Source Label | Set the label of the data source that allows people to recognise the type of data or location where data is read or written to. |
| SQLite Database File Path | Set the full file path to the SQLite database file (version 3) that is stored on the file system. If the file is stored on a network drive, then ensure that a UNC computer name is used in the path instead of a mapped network drive. Mapped network drives normally cannot be seen by the Connector's Host Windows Service that runs as a system Windows user. |
| Connection Testing SQL Query | Set an example SQL query that is used to test that a connection can be made to the configured SQLite database file. Avoid setting a SQL query that returns lots of data. Set an SQL query such as: SELECT 1 |
| Write Data Using Transactions | If set to Yes then when the adaptor's data imports are configured to import data into the SQLite database file and multiple record inserts occur, the data will only be written to the database, in a single transaction, if all of the inserts execute successfully. If set to No and only some of the inserts execute successfully, then the data from those inserts will remain in the database and could exist in a partially completed state. |
| Write Data Using Prepared Statements | If set to Yes then when the adaptor's data imports are configured to import data into a SQLite database file, each data field will be added to an insert query using prepared statements. This ensures that the values being inserted cannot break the syntax of the SQL query, however it means that you cannot see the values being placed within SQL insert queries in the Connector logs. Typically only set to No when first configuring the adaptor or diagnosing data import issues. |
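The effect of the two write settings can be sketched with Python's built-in `sqlite3` module. This is an illustration of the general database behaviour, not the adaptor's implementation (which uses the System.Data.SQLite driver); the `orders` table and its records are invented for the example.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT NOT NULL)"
)

insert_sql = "INSERT INTO orders (id, customer) VALUES (?, ?)"

# The second record is invalid (customer is NULL), so its insert fails.
records = [(1, "O'Brien Traders"), (2, None)]

try:
    # Transaction: commit only if every insert succeeds, otherwise roll back.
    with connection:
        for record in records:
            # Prepared statement: values are bound to the ? placeholders, so
            # the apostrophe in O'Brien cannot break the SQL syntax.
            connection.execute(insert_sql, record)
except sqlite3.IntegrityError:
    pass

count_after_failure = connection.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(count_after_failure)

# Retrying with only the valid record succeeds and is committed.
with connection:
    connection.execute(insert_sql, records[0])

stored_customer = connection.execute(
    "SELECT customer FROM orders WHERE id = 1"
).fetchone()[0]
print(stored_customer)
```

Without the transaction, the first insert would have remained in the table after the second one failed, leaving the data in a partially completed state.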

Data Export Types

The Generic adaptor supports exporting data from the following types of data sources, based on the Data Source Type assigned to each of the adaptor's data exports.

Spreadsheet CSV Data Exports

For CSV spreadsheet data sources each data export needs to be configured to map the columns in the spreadsheet file to the Ecommerce Standards Document record fields. Some data exports will have their data joined together when the data is converted into the standards objects and exported to the relevant Ecommerce system. Each data export can be configured to read from one or more CSV files. For each data export, placing the wildcard asterisk character in the "CSV Text File Name Matcher" filename field allows multiple files to be read that contain the same prefix or suffix, e.g. myfile*.csv will match files named myfile_1.csv and myfile_2.csv. The exports can be configured to read CSV data from any file extension, not just CSV files. The data source also supports reading in files that use different delimiter characters to separate data, such as tabs or spaces. There are data source settings where you define the characters that delimit field data, as well as rows. Additionally there are settings that allow you to specify the characters that can escape a character that would normally be used to delimit data, or rows.
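The wildcard example above behaves like shell-style file name matching. The short Python sketch below uses the standard `fnmatch` module to show which names the pattern would pick up; it assumes the asterisk means "any run of characters", which is how the example in this section reads.

```python
from fnmatch import fnmatchcase

file_names = ["myfile_1.csv", "myfile_2.csv", "myfile_2.txt", "otherfile.csv"]

# The asterisk stands for any run of characters between the prefix and suffix.
matched = [name for name in file_names if fnmatchcase(name, "myfile*.csv")]
print(matched)
```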

SQL Database Based Data Exports

For ODBC, MS SQL Server, and SQLite database data sources each data export needs to be configured to read data from a specified database table (or joined tables, or views), and map the fields in the tables or views to the Ecommerce Standards Document record fields. Some data exports will have their data joined together when the data is converted into the standards objects and exported to the relevant Ecommerce system.

Ecommerce Standards Documents Webservice Data Exports

For the ESD web service data sources the exports are configured to send an HTTP GET request to a compatible web service, which will return data in the native ESD serialized JSON format that the Connector will simply forward on to the relevant Ecommerce system. Some of the data exports listed below are not required to be configured to an ESD data source since the data export's settings are not required to obtain the relevant data.

JSON/XML/CSV - Webservice/File Data Exports

The JSON/XML/CSV web service or file based data exports first need a data source to be set up so that the adaptor knows whether to make an HTTP request to retrieve the JSON, XML or CSV data from a computer network or internet location, or otherwise read it from a file stored on the file system. This is done by setting the Protocol of the data source to either "http://", "https://", or "file:///". For HTTP connections the domain and header information will need to be set to allow connections to be made to a web service. For each data export you can then set the relative URL path to call the most appropriate endpoint that will be used to return data. When setting up the data source you will need to specify whether XML, CSV or JSON data should be expected to be returned from the web service or locally found file. Some web services place limitations on how many records may be returned in one request. When this occurs the data source type has a setting that can specify the maximum number of records "per page" that will be returned in one request, and the adaptor can make subsequent requests to the same web service endpoint until all data has been obtained.

If XML or CSV data has been returned from either the web service or local file then it will first be converted into JSON data. After the JSON data has been successfully processed, then for each data export the "JSON Record Set Path" is used to locate the objects within the JSON data structure that represent records. The JSON Record Set Path needs to be set using a JSONPath formatted string. Using JSONPath you can place conditions on which objects are returned. For example dataRecords[*] would return all objects found within the array assigned to the dataRecords key in the JSON data. Another example, Items[*].SellingPrices[?(@.QuantityOver <= 0)], would find price records within the SellingPrices array that have the attribute "QuantityOver" with a value less than or equal to 0. The SellingPrices array would need to be a child of objects stored in the Items array. For CSV files the path will need to be set to dataRecords[*]. Multiple paths can be set by placing the --UNION-- text between each path. When this occurs each path separated by the --UNION-- delimiter will be called sequentially to read through the JSON/XML/CSV data, and its found rows will be appended to the records previously obtained. This works similarly to the UNION clause in the SQL database language.

Once the adaptor has obtained a list of records from the JSON data, it will then iterate through each record and allow data to be retrieved from each JSON record object. Each record object's values can then be read into each data field for the data export. Additionally for each record field there is the ability to place functions that allow traversal through the JSON data structure to obtain the required data, as well as allow the data to be manipulated before being placed in the Ecommerce Standards Document record. Once all JSON record objects have been read then the data (which has been standardised) can be sent on to the corresponding Ecommerce system that is to receive the data.
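To make the two example paths and the --UNION-- behaviour concrete, the sketch below reproduces them in plain Python rather than with a JSONPath engine. The sample data, the function names and the `Price` and `Code` attributes are invented for illustration; only the paths themselves come from this section.

```python
data = {
    "Items": [
        {"Code": "TEATOW123", "SellingPrices": [
            {"QuantityOver": 0, "Price": 4.5},
            {"QuantityOver": 10, "Price": 4.0},
        ]},
        {"Code": "CP222", "SellingPrices": [
            {"QuantityOver": 0, "Price": 2.0},
        ]},
    ],
    "dataRecords": [{"Code": "TEALIQ"}],
}


def base_prices(doc):
    """Plain-Python stand-in for Items[*].SellingPrices[?(@.QuantityOver <= 0)]."""
    return [
        price
        for item in doc.get("Items", [])
        for price in item.get("SellingPrices", [])
        if "QuantityOver" in price and price["QuantityOver"] <= 0
    ]


def all_data_records(doc):
    """Plain-Python stand-in for dataRecords[*]."""
    return list(doc.get("dataRecords", []))


def union(doc, *selectors):
    """Mimic --UNION--: run each path in order, appending its rows to the result."""
    rows = []
    for selector in selectors:
        rows.extend(selector(doc))
    return rows


records = union(data, base_prices, all_data_records)
for record in records:
    print(record)
```

The rows from the first path come first, followed by the rows from the second path, in the same way that the adaptor appends each path's records to those already obtained.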

Data Export Pagination

When a data export is called, multiple requests may need to be made to external web services to return a full set of records to export. For example a web service may only return a maximum of 100 products at a time, so additional requests may need to occur to get the next "page" of records (similar to turning a page to view the next set of products within a printed catalogue). The Generic adaptor's JSON/XML/CSV web service/file data source type supports making multiple requests for all data exports, also known as "Pagination", to collate a full set of records. Only once a full set of records is collated will the adaptor then export the data in its entirety. Within the JSON/XML/CSV web service/file data source type settings window a setting exists called "HTTP Request Records Per Page". If this is set to a number larger than 0 then it tells the adaptor how many records it expects to receive per page when running any data exports assigned to the data source. If the number of records retrieved is equal to or greater than the number specified within the setting then the adaptor will make an additional request to get the next page of data.

Example

In the example below 2 requests must be made to the example.com web service to return all 5 product records that exist. Each request only allows a maximum of 3 records to be returned, and the web service allows a parameter called "page" that controls which page of products is returned. When the Generic adaptor's Products data export is run it can be configured to call the web service many times (2 in this case) until all pages of product data have been obtained.

Page 1 Data, URL http://www.example.com/api/products?page=1

| Record Number | Product Name | Code |
|---|---|---|
| 1 | Tea Towel | TEATOW123 |
| 2 | Cup | CP222 |
| 3 | Tea | TEALIQ |

Page 2 Data, URL http://www.example.com/api/products?page=2

| Record Number | Product Name | Code |
|---|---|---|
| 4 | Hand Soap | HSM247 |
| 5 | Sponge | SPS22322 |

Full Record Set: pages of records collated and exported by the Generic adaptor's Products data export

| Record Number | Product Name | Code |
|---|---|---|
| 1 | Tea Towel | TEATOW123 |
| 2 | Cup | CP222 |
| 3 | Tea | TEALIQ |
| 4 | Hand Soap | HSM247 |
| 5 | Sponge | SPS22322 |
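The collation in this example can be sketched as a simple loop. The sketch below is a simulation under stated assumptions rather than the adaptor's code: fetch_page is a hypothetical stand-in for the HTTP request to the example.com web service, and it assumes that another page is requested whenever the current page comes back full (3 records here).

```python
# In-memory stand-in for the two pages returned by the example web service.
PAGES = {
    1: [
        {"name": "Tea Towel", "code": "TEATOW123"},
        {"name": "Cup", "code": "CP222"},
        {"name": "Tea", "code": "TEALIQ"},
    ],
    2: [
        {"name": "Hand Soap", "code": "HSM247"},
        {"name": "Sponge", "code": "SPS22322"},
    ],
}


def fetch_page(page_number):
    """Hypothetical replacement for requesting .../api/products?page=N."""
    return PAGES.get(page_number, [])


def export_all(records_per_page):
    """Collate every page into one record set before exporting it."""
    records = []
    requests_made = 0
    page_number = 1
    while True:
        page = fetch_page(page_number)
        requests_made += 1
        records.extend(page)
        # Assumption: a page with fewer records than the configured
        # page size is the last page; 0 or less disables pagination.
        if records_per_page <= 0 or len(page) < records_per_page:
            break
        page_number += 1
    return records, requests_made


records, requests_made = export_all(records_per_page=3)
print(requests_made)                  # 2
print([r["code"] for r in records])   # all 5 product codes, in page order
```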

There are 2 ways in which records are counted for each page of data requested. The first is based on the number of records that are returned using the path set in the JSON RecordSet Path setting configured in the data export. Alternatively, if a path is set in the "Page Record Count Path" setting for a data export, the adaptor will instead locate the object at that path in the JSON data structure and count the number of child objects assigned to it. This second option allows you to get the number of records found in a page of data independently of the number of records being processed by the data export.

For example if a request is made to a web service to get all the pricing of products and the web service returns product records with pricing included for each product record, then in the "JSON Record Set Path" you may need to set the path to data.products[*].prices[*], however that could return 0 price records for a full page of products that have no assigned pricing data. If the web service is paging on product records and the adaptor is paging on pricing records, then the adaptor's data export may stop trying to request more pages of prices when 1 page of products contains no pricing records. You can mitigate this problem by setting the data export's "Page Record Count Path" setting to data.products to tell the data export to count the number of records that exist under the data.products object, instead of the pricing records being processed.
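The difference between the two counting methods can be illustrated with a short Python sketch. The function names and the sample page are hypothetical, and the "page is full" rule used to decide on another request is an assumption made for the illustration.

```python
def count_by_record_set_path(page_data):
    """Count what data.products[*].prices[*] would return: every price record."""
    products = page_data["data"]["products"]
    return sum(len(product.get("prices", [])) for product in products)


def count_by_page_record_count_path(page_data):
    """Count the child objects under data.products, which the service pages on."""
    return len(page_data["data"]["products"])


def needs_next_page(record_count, records_per_page):
    # Assumption: another page is requested only when the current page is full.
    return record_count >= records_per_page


# A full page of 3 products, none of which has pricing data.
page = {"data": {"products": [
    {"code": "TEATOW123", "prices": []},
    {"code": "CP222", "prices": []},
    {"code": "TEALIQ", "prices": []},
]}}

print(needs_next_page(count_by_record_set_path(page), 3))         # False: paging stops early
print(needs_next_page(count_by_page_record_count_path(page), 3))  # True: paging continues
```

Counting the products rather than the prices keeps the export requesting pages even when a whole page of products carries no pricing records.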

Within the URL, request headers, request body and record set path settings of each data export in the adaptor you can set data hooks to control how each request changes to get multiple pages of data. Examples:

Example: Paginate By Page Number

http://www.example.com/api/products?page={pageNumber}

Output:

http://www.example.com/api/products?page=1

http://www.example.com/api/products?page=2

http://www.example.com/api/products?page=3

Example: Paginate By Last Record Of Previous Page

http://www.example.com/api/products?starting-product-id={lastKeyRecordIDURIencoded}&recordSize={recordsPerPage}

Output:

http://www.example.com/api/products?starting-product-id=&recordSize=100

http://www.example.com/api/products?starting-product-id=GAB332&recordSize=100

http://www.example.com/api/products?starting-product-id=GFH33211&recordSize=100

Example: Paginate By Record Index

http://www.example.com/api/products?starting-index={pageOffset}&recordSize={recordsPerPage}

Output:

http://www.example.com/api/products?starting-index=0&recordSize=100

http://www.example.com/api/products?starting-index=100&recordSize=100

http://www.example.com/api/products?starting-index=200&recordSize=100

JSON/XML/CSV Data Hooks

Any of the following data hooks may be embedded within the data export's "Data Export URL", "JSON RecordSet Path", "HTTP Request Headers" and "HTTP Request Body" settings. Each hook gets replaced with its value, allowing record searches to be set up within JSON/XML/CSV webservice/file requests:

- {recordsPerPage}

Number of records to retrieve for each page of records requested. This number is the same as set within the data export's assigned JSON/XML/CSV data source type.

- {pageNumber}

The current page number being processed by the data export. The page number starts from number 1.

- {pageIndex}

The current page number being processed by the data export, with the page number offset by -1. E.g. for page 1 this hook would return 0, for page 2 it returns 1.

- {pageOffset}

The current page number being processed by the data export, offset by -1, then multiplied by the number of records per page. For example if each page was configured in the export's assigned JSON/XML/CSV data source type to contain 100 records, then this hook's value would be 0 for the 1st page, 100 for the 2nd page, and 200 for the 3rd page ((page 3 - 1) * 100 records per page).

- {pageIdentifier}

Gets the identifier of the page from the JSON path set in the Next Page Identifier Path of the data export. This can be used to obtain a specific identifier in the returned response data that is then used in the next request to obtain the next page of data. The value is empty for the first page request.

- {lastKeyRecordID}

Gets the key identifier of the last record that was retrieved by the data export. This is useful if pages of records are paginated not by number, but based on alphabetical order, using the key (unique identifier) of the record as the starting basis to retrieve the next page of records.

- {lastKeyRecordIDXMLencoded}

Same value as {lastKeyRecordID}, except that the value has been XML encoded to allow it to be safely embedded within XML or HTML data without breaking the structure of such documents.

- {lastKeyRecordIDURIencoded}

Same value as {lastKeyRecordID}, except that the value has been URI encoded to allow it to be safely embedded within URLs (avoiding breaking the syntax of a URL).
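As a rough illustration of how these hooks resolve, the Python sketch below substitutes each hook into a setting value. The function names hook_values and apply_hooks are hypothetical, and the exact encoding rules the adaptor applies are not stated in this document, so the standard library's quote and escape functions are used here as assumptions.

```python
from urllib.parse import quote
from xml.sax.saxutils import escape


def hook_values(page_number, records_per_page, last_key_record_id="", page_identifier=""):
    """Compute the value of each data hook for the page being requested."""
    return {
        "{recordsPerPage}": str(records_per_page),
        "{pageNumber}": str(page_number),                            # starts from 1
        "{pageIndex}": str(page_number - 1),                         # starts from 0
        "{pageOffset}": str((page_number - 1) * records_per_page),
        "{pageIdentifier}": page_identifier,                         # empty for the first page
        "{lastKeyRecordID}": last_key_record_id,
        "{lastKeyRecordIDXMLencoded}": escape(last_key_record_id),   # assumed XML escaping
        "{lastKeyRecordIDURIencoded}": quote(last_key_record_id, safe=""),  # assumed URI encoding
    }


def apply_hooks(template, **page_state):
    """Replace every hook found in a URL, header, body or path setting."""
    for hook, value in hook_values(**page_state).items():
        template = template.replace(hook, value)
    return template


url = "http://www.example.com/api/products?starting-index={pageOffset}&recordSize={recordsPerPage}"
for page_number in (1, 2, 3):
    print(apply_hooks(url, page_number=page_number, records_per_page=100))
```

Running the loop prints the three "Paginate By Record Index" URLs, with starting-index values of 0, 100 and 200.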

Dynamic HTTP Request Generation

When a JSON/XML/CSV Web Service/File data source type is assigned to a data export, within each of the data export settings (Data Export URL, JSON RecordSet Path, Page Record Count Path, HTTP Request Headers, HTTP Request Body, and Data Export Result Matcher), there is the ability to call the JSON/XML/CSV field functions to determine the final value set within each of these fields. To do so set the following text within any of the fields:

==JSON-FUNC-EVAL==

JSON-FUNC-EVAL is short for "JSON Function Evaluate". Any text placed after this keyword will be treated as JSON data, allowing any of the JSON/XML/CSV field functions to be used to determine the request field's value.

For example placing the following text into the HTTP Request Body will allow the HTTP request body to have its content dynamically set:

Example 1:

Setting Value: ==JSON-FUNC-EVAL==CONCAT('timeout=',CALC('5*60*1000'))

Output: timeout=300000

Request JSON Data

Each of the request evaluating functions can utilise the following request JSON object data:

{
  "nonce": "4859b7f7f7534f2087cec23d977fe299",
  "datetimenow_utc_unix": 1556520888402,
  "request_url": "https://example.com/get/data/from/webservice?param1=a&param2=b",
  "request_http_method": "GET"
}

| Request JSON Data | Description |
|---|---|
| nonce | Returns a randomly generated value, based on a Universally Unique Identifier (UUID). The value generated at the time a request is made is guaranteed to be unique compared to values generated at any other time. |
| datetimenow_utc_unix | Returns the current date time at which the request is made. The date time is a 64-bit long integer storing the number of milliseconds since the 01/01/1970 UTC epoch. This value can be converted into a human readable date time format using the DATESTR_CONVERT function. |
| request_url | Contains the fully evaluated request URL that will be used to call the data source to get data from or push data to. The URL is composed of the data source's protocol, Host/IP address, and the data export's/import's URL. |
| request_http_method | Contains the HTTP request method used to call the configured data source, based on the HTTP Request Method set within the data export or data import. |
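To make the shape of this request data concrete, the Python sketch below builds a comparable object and converts the millisecond timestamp from the example into a readable UTC date time. The function names are hypothetical, and how the adaptor actually generates these values is not described here; uuid4 and the system clock are assumptions.

```python
import time
import uuid
from datetime import datetime, timezone


def build_request_data(request_url, http_method):
    """Assemble an object shaped like the request JSON data shown above."""
    return {
        "nonce": uuid.uuid4().hex,                          # 32 hex characters from a random UUID
        "datetimenow_utc_unix": int(time.time() * 1000),    # milliseconds since the Unix epoch
        "request_url": request_url,
        "request_http_method": http_method,
    }


def millis_to_utc(millis):
    """Convert epoch milliseconds into a timezone-aware UTC datetime."""
    seconds, remainder = divmod(millis, 1000)
    moment = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return moment.replace(microsecond=remainder * 1000)


request_data = build_request_data(
    "https://example.com/get/data/from/webservice?param1=a&param2=b", "GET"
)
print(sorted(request_data))
print(millis_to_utc(1556520888402).isoformat())  # 2019-04-29T06:54:48.402000+00:00
```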

Saving HTTP Response Data To File

When the JSON/XML/CSV Web Service/File data source type is assigned to a data export, and the data source type is configured to obtain data from a web service using an HTTP or HTTPS request, there is the ability to save the data returned in the HTTP response body to a file before the data is processed. This is useful if multiple data exports need to export out the same data, as well as for testing and diagnostics to check the raw data being returned from a web service.

Within the Generic adaptor's settings window, under the Data Exports tab, when a JSON/XML/CSV Web Service/File data source type is assigned to a data export, a setting labelled "Save HTTP Response Data To Folder" exists within the JSON/XML/CSV Settings tab. If the setting is configured with a full file path (including file name and extension) then when an HTTP request is made to obtain data from a web service, the data returned in the web service's HTTP response body will be saved to a file at the configured file system location and file name. If the file path contains the data hook {pageNumber} then the hook will be replaced by the page number of the request made to obtain the data. This allows many pages of data to be saved separately to the file system, which is useful when pagination occurs to obtain a full data set from the web service across multiple requests.

Before the Generic adaptor's data export saves any data to a folder location, it will create a file labelled "Data-Files-Incomplete.lock" in the same location. This ensures that if multiple pages of data are required to obtain a full record set from the web service, all of the pages' data files must be fully saved to the file system. If this doesn't occur, then the lock file will remain in place to denote that the directory contains incomplete data that should not be read. Once the data for all pages has been successfully saved, the lock file will be removed. If this lock file is in place when other data exports try to read from the same directory then an error will occur and the data exports will fail. This ensures that any data exports linked to the same data fail gracefully and don't export incomplete data. The check for the lock file can be ignored by placing the parameter "?ignore-data-files-incomplete-lock" at the end of the file path. When this occurs the adaptor will continue to look for files regardless of whether a lock file exists or not.
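The lock file behaviour can be sketched as follows. This is a minimal illustration under assumptions, not the adaptor's implementation: the function names and the products-N.json file naming are hypothetical, and the "?ignore-data-files-incomplete-lock" parameter is modelled as a simple boolean argument.

```python
import tempfile
from pathlib import Path

LOCK_NAME = "Data-Files-Incomplete.lock"


def save_pages(folder, pages):
    """Write each page body to its own file, guarded by the lock file."""
    folder = Path(folder)
    lock = folder / LOCK_NAME
    lock.touch()  # created before any data file is written
    for page_number, body in enumerate(pages, start=1):
        (folder / f"products-{page_number}.json").write_text(body, encoding="utf-8")
    # Only reached when every page was saved; a failure above leaves the lock in place.
    lock.unlink()


def read_pages(folder, ignore_lock=False):
    """Read the saved page files, failing if the directory is marked incomplete."""
    folder = Path(folder)
    if (folder / LOCK_NAME).exists() and not ignore_lock:
        raise RuntimeError("directory contains incomplete data")
    return [path.read_text(encoding="utf-8") for path in sorted(folder.glob("products-*.json"))]


with tempfile.TemporaryDirectory() as folder:
    save_pages(folder, ['{"page": 1}', '{"page": 2}'])
    print(read_pages(folder))                   # both pages, lock already removed
    (Path(folder) / LOCK_NAME).touch()          # simulate an interrupted save
    print(read_pages(folder, ignore_lock=True)) # still readable when the check is skipped
```

Removing the lock only after the final write means a reader never needs to know how many pages were expected; the presence of the lock alone marks the directory as unsafe to read.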

Data Exports

The Generic adaptor has the data exports listed in the table below, which can be configured to read data from one of its chosen ODBC database, MS SQL Server database, CSV spreadsheet file(s), ESD supported web service or JSON/XML/CSV based data sources. The obtained data is then converted into an Ecommerce Standards Document, and exported from the adaptor into the configured Ecommerce system or Connector Data Set database for importing.

Data Export Definitions

| Data Export | Description |

|---|---|

| Adaptor Status | Returns the status of the adaptor's connection to its data source. |

| Attribute Profile Attributes | Obtains a list of attributes assigned to an attribute profile. This data is mapped to the ESDRecordAttribute record when exported. The data export is joined with the Attribute Profile Product Values and Attribute Profiles data exports to construct an ESDocumentAttribute document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Attribute Profile Product Values | Obtains a list of attribute values assigned to products. This data is mapped to the ESDRecordAttributeValue record when exported. The data export is joined with the Attribute Profile Attributes and Attribute Profiles data exports to construct an ESDocumentAttribute document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow attribute data to be exported. |

| Attribute Profiles | Obtains a list of attribute profiles. This data is mapped to the ESDRecordAttributeProfile record when exported. The data is joined with the Attribute Profile Attributes and Attribute Profile Product Values data exports to construct an ESDocumentAttribute document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Category Trees | Obtains a list of category trees. This data is mapped to the ESDRecordCategoryTree record when exported. The data is joined with the Categories data export to construct an ESDocumentCategory document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Categories | Obtains a list of categories. This data is mapped to the ESDRecordCategory record when exported. The data is joined with the Category Products data export to construct an ESDocumentCategory document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow category data to be exported. |

| Category Products | Obtains a list of mappings that assign products to categories. This data is placed within an ESDRecordCategory record when exported. The data is joined with the Categories data export to construct an ESDocumentCategory document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Currency Exchange Rates | Obtains a list of currency exchange rates. Each currency exchange rate record contains the rate that determines how much it would cost to buy one currency with another. The data is placed within an ESDRecordCurrencyExchangeRate record when exported. The records are then all placed into an ESDocumentCurrencyExchangeRate document which is exported out in its final state to the Ecommerce system. |

| Combination Product Parents | Obtains a list of products that are set as the parent product of an assigned combination. Each product is assigned to a combination profile. The data is placed within an ESDRecordProductCombinationParent record when exported. The data is joined with the Combination Products, Combination Profile Field Values, Combination Profile Fields, and Combination Profiles data exports to construct an ESDocumentProductCombination document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow product combination data to be exported. |

| Combination Products | Obtains a list of mappings that allow a product to be assigned to a parent product for a given field and value in a combination. The data is placed within an ESDRecordProductCombination record when exported. The data is joined with the Combination Product Parents, Combination Profile Field Values, Combination Profile Fields, and Combination Profiles data exports to construct an ESDocumentProductCombination document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Combination Profile Field Values | Obtains a list of values that are associated to fields in a combination profile. The data is placed within an ESDRecordCombinationProfileField record when exported. The data is joined with the Combination Product Parents, Combination Products, Combination Profile Fields, and Combination Profiles data exports to construct an ESDocumentProductCombination document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Combination Profile Fields | Obtains a list of fields that are associated to a combination profile. The data is placed within an ESDRecordCombinationProfileField record when exported. The data is joined with the Combination Product Parents, Combination Products, Combination Profile Field Values, and Combination Profiles data exports to construct an ESDocumentProductCombination document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Combination Profiles | Obtains a list of combination profiles. The data is placed within an ESDRecordCombinationProfile record when exported. The data is joined with the Combination Product Parents, Combination Products, Combination Profile Field Values, and Combination Profile Fields data exports to construct an ESDocumentProductCombination document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Contract Customer Accounts | Obtains a list of mappings between customer accounts and contracts. The data is placed within an ESDRecordContract record when exported. The data is joined with the Contract Products and Contracts data exports to construct an ESDocumentCustomerAccountContract document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Contract Products | Obtains a list of mappings between products and contracts. The data is placed within an ESDRecordContract record when exported. The data is joined with the Contract Customer Accounts and Contracts data exports to construct an ESDocumentCustomerAccountContract document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Contracts | Obtains a list of contracts. The data is placed within an ESDRecordContract record when exported. The data is joined with the Contract Customer Accounts and Contract Products data exports to construct an ESDocumentCustomerAccountContract document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow contract data to be exported. |

| Customer Account Addresses | Obtains a list of addresses assigned to customer accounts. The data is placed within an ESDRecordCustomerAccountAddress record when exported. The record data is all placed into an ESDocumentCustomerAccountAddress document which is exported out in its final state to the Ecommerce system. |

| Customer Account Payment Types | Obtains a list of payment types assigned to specific customer accounts. The data is placed within an ESDRecordPaymentType record when exported. The data is joined with the Customer Accounts data export to construct an ESDocumentCustomerAccount document which is exported out in its final state to the Ecommerce system. |

| Customer Accounts | Obtains a list of customer accounts. The data is placed within an ESDRecordCustomerAccount record when exported. The record data is joined with the Customer Account Payment Types data export to construct an ESDocumentCustomerAccount document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow customer account data to be exported. |

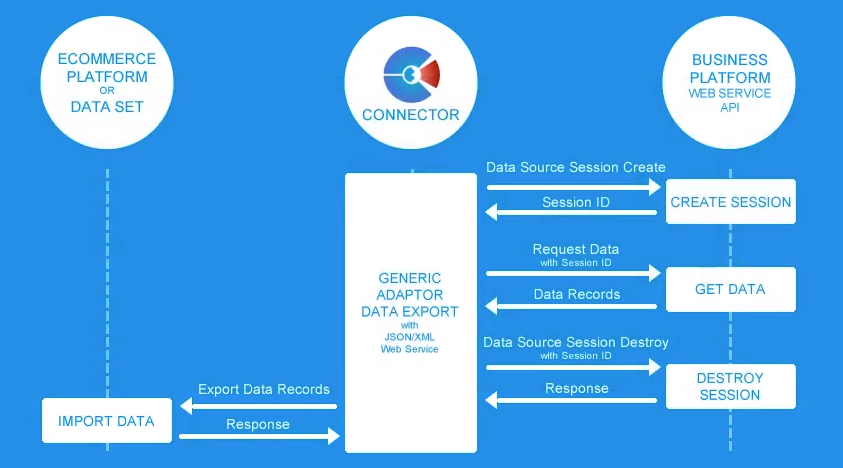

| Data Source Session Create | If assigned to a JSON/XML/CSV Web Service/File data source type then this data export will be called to attempt to log in and create a new session with the data source's web service. If successful then a session ID returned by the web service can be passed on and used with subsequent calls to the web service in other data exports. This data export will get called any time any other data export is assigned to a JSON/XML/CSV Web Service/File data source type, and only if a session ID is able to be obtained will the other data export get called to run. |

| Data Source Session Destroy | If assigned to a JSON/XML/CSV Web Service/File data source type then this data export will be called to attempt to log out and destroy an existing session with the data source's web service, based on the Data Source Session Create data export being called. This data export will get called any time any other data export is assigned to a JSON/XML/CSV Web Service/File data source type, and only if a session ID was able to be obtained in a previous Data Source Session Create data export call. It is highly advisable to configure this data export if the Data Source Session Create data export is configured. This ensures that sessions are correctly destroyed and do not live longer than the absolute minimum amount of time, to prevent third-party software from gaining access to sensitive information. |

| Flagged Products | Obtains a list of mappings between products and flags. The data is placed within an ESDRecordFlagMapping record when exported. The data is joined with the Flags data export to construct an ESDocumentFlag document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow flag data to be exported. |

| Flags | Obtains a list of flags. The data is placed within an ESDRecordFlag record when exported. The data is joined with the Flagged Products data export to construct an ESDocumentFlag document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| General Ledger Accounts | Obtains a list of General Ledger Accounts. The data is placed within an ESDRecordGeneralLedgerAccount record when exported. The records are then all placed into an ESDocumentGeneralLedgerAccount document which is exported out in its final state to the Ecommerce system. |

| Item Group Products | Obtains a list of item groups assigned to specific products. The data is placed within an ESDRecordItemGroup record when exported. The data is joined with the Item Groups data export to construct an ESDocumentItemGroup document which is exported out in its final state to the Ecommerce system. |

| Item Groups | Obtains a list of item groups. The data is placed within an ESDRecordItemGroup record when exported. The data is joined with the Item Group Products data export to construct an ESDocumentItemGroup document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow item group data to be exported. |

| Item Relations | Obtains a list of item relations that allow one product to be linked to another product. The record data is placed within an ESDRecordItemRelation record when exported. The records are then all placed into an ESDocumentItemRelation document which is exported out in its final state to the Ecommerce system. |

| Location Customer Accounts | Obtains a list of mappings between customer accounts and locations. The data is placed within an ESDRecordCustomerAccount record when exported. The data is joined with the Customer Accounts data export to construct an ESDocumentCustomerAccount document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Location Products | Obtains a list of mappings between products and locations, with each product defining the stock quantity available at each location. The data is placed within an ESDRecordStockQuantity record when exported. The data is joined with the Locations and Location Customer Accounts data exports to construct an ESDocumentLocation document which is exported out in its final state to the Ecommerce system. For ESD webservice data sources this data export is not used and does not need to be configured. |

| Locations | Obtains a list of locations. The data is placed within an ESDRecordLocation record when exported. The data is joined with the Location Products and Location Customer Accounts data exports to construct an ESDocumentLocation document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow location data to be exported. |

| Makers | Obtains a list of makers, also known as manufacturers, who create products and models. The record data is all placed into an ESDocumentCustomerMaker document which is exported out in its final state to the Ecommerce system. |

| Maker Models | Obtains a list of maker models. These models are created by makers/manufacturers and may be made up of several products/parts. The data is placed within an ESDRecordMakerModel record when exported. The data is joined with the Maker Model Attributes data export to construct an ESDocumentMakerModel document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow maker model data to be exported. |

| Maker Model Attributes | Obtains a list of attributes for maker models. These attributes allow any kind of data to be set against model records. The data is placed within an ESDRecordAttributeValue record when exported. The data is joined with the Maker Models data export to construct an ESDocumentMakerModel document which is exported out in its final state to the Ecommerce system. |

| Maker Model Mappings | Obtains a list of maker model mappings that allow products/parts to be assigned to maker models for given categories. The data is placed within an ESDRecordMakerModelMapping record when exported. The data is joined with the Maker Model Mapping Attributes data export to construct an ESDocumentMakerModelMapping document which is exported out in its final state to the Ecommerce system. For all data source types this data export must be configured to allow maker model mapping data to be exported. |

| Maker Model Mapping Attributes | Obtains a list of attributes for maker model mappings. These attributes allow any kind of data to be set against model mapping records. The data is placed within an ESDRecordAttributeValue record when exported. The data is joined with the Maker Model Mappings data export to construct an ESDocumentMakerModelMapping document which is exported out in its final state to the Ecommerce system. |

| Payment Types | Obtains a list of payment types. The record data is placed within an ESDRecordPaymentType record when exported. The records are then all placed into an ESDocumentPaymentType document which is exported out in its final state to the Ecommerce system. |

| Price Levels | Obtains a list of price levels. The data is placed within an ESDPriceLevel record when exported. The record data is all placed into an ESDocumentPriceLevel document which is exported out in its final state to the Ecommerce system. |

| Product Alternate Codes | Obtains a list of alternate codes assigned to products. The data is placed within an ESDRecordAlternateCode record when exported. The record data is all placed into an ESDocumentAlternateCode document which is exported out in its final state to the Ecommerce system. |

| Product Attachments | Obtains a list of attachment files assigned to products. The data is placed within an ESDRecordAttachment record when exported. The adaptor will locate the file set in the record, and if found will upload the file to the Ecommerce system. |

| Product Customer Account Pricing | Obtains a list of product prices assigned to specific customer accounts or pricing groups. The data is placed within an ESDRecordPrice record when exported. The data is joined with the Product Customer Account Pricing Groups data export to construct an ESDocumentPrice document which is exported out in its final state to the Ecommerce system. |

| Product Customer Account Pricing Groups | Obtains a list of mappings between customer accounts and pricing groups. The data is collated and joined with the Product Customer Account Pricing data export to construct an ESDocumentPrice document which is exported out in its final state to the Ecommerce system. |

| Product Images | Obtains a list of image files assigned to products. The data is placed within an ESDRecordImage record when exported. The adaptor will locate the file set in the record, and if found check that it is an image file, perform any required resizing, then upload the file to the Ecommerce system. |

| Product Kits | Obtains a list of component products assigned to kits. The data is placed within an ESDRecordKitComponent record when exported. The record data is all placed into an ESDocumentKit document which is exported out in its final state to the Ecommerce system. |

| Product Pricing | Obtains a list of price-level prices assigned to products. The data is placed within an ESDRecordPrice record when exported. The record data is all placed into an ESDocumentPrice document which is exported out in its final state to the Ecommerce system. |

| Product Quantity Pricing | Obtains a list of price-level quantity based prices assigned to products. The data is placed within an ESDRecordPrice record when exported. The record data is all placed into an ESDocumentPrice document which is exported out in its final state to the Ecommerce system. |

| Product Sell Units | Obtains a list of sell units assigned to specific products. The data is placed within an ESDRecordSellUnit record when exported. The data is joined with the Products data export to construct an ESDocumentProduct document which is exported out in its final state to the Ecommerce system. |

| Product Stock Quantities | Obtains a list of stock quantities associated to one product, or a list of products. The data is placed within an ESDRecordStockQuantity record when exported. The record data is all placed into an ESDocumentStockQuantity document which is exported out in its final state to the Ecommerce system. Data Hooks: |

| Products | Obtains a list of products. The data is placed within an ESDRecordProduct record when exported. The product record data is joined with the Product Sell Units data export and together all placed into an ESDocumentProduct document which is exported out in its final state to the Ecommerce system. |

| Purchasers | Obtains a list of purchasers. The data is placed within an ESDRecordPurchaser record when exported. The records are then all placed into an ESDocumentPurchaser document which is exported out in its final state to the Ecommerce system. |

| Sale Orders | Obtains a list of sales orders. The data is placed within an ESDRecordOrderSale record when combined into a list. The list of sales order records is used to call the Sales Order export to retrieve the full details for each sales order record found. |

| Sales Order | Obtains the details of a single sales order record. The data is placed within an ESDRecordOrderSale record when combined into a list. The records are then all placed into an ESDocumentOrderSale document which is exported out in its final state to the Ecommerce system. Data Hooks: |

| Sales Order Lines | Obtains a list of lines for a single sales order obtained from the Sales Order data export. The line data is placed within an ESDRecordOrderSaleLine record and combined into a list of line records. The line records are then all placed into an ESDocumentOrderSale document for the sales order record obtained in the Sales Order data export. Data Hooks: |

| Sales Order Line Taxes | Obtains a list of taxes for each sales order line obtained from the Sales Order Lines data export. The line tax data is placed within an ESDRecordOrderLineTax record and combined into a list of line tax records. The line tax records are then all placed into the ESDRecordOrderSaleLine record for each line record found in the Sales Order Lines data export. Data Hooks: |

| Sales Order Line Attribute Profiles | Obtains a list of attribute profiles for each sales order line obtained from the Sales Order Lines data export. The line attribute profile data is placed within an ESDRecordOrderLineAttributeProfile record and combined into a list of line attribute profile records. The line's attribute profile records are then all placed into the ESDRecordOrderSaleLine record for each line record found in the Sales Order Lines data export. Data Hooks: |

| Sales Order Line Attributes | Obtains a list of attributes for each sales order line's attribute profile obtained from the Sales Order Line Attribute Profiles data export. The line attribute data is placed within an ESDRecordOrderLineAttribute record and combined into a list of line attribute records. The line's attribute records are then all placed into the ESDRecordOrderLineAttributeProfile record for each attribute profile record found in the Sales Order Line Attribute Profiles data export. Data Hooks: |

| Sales Order Payments | Obtains a list of payments for a single sales order obtained from the Sales Order data export. The payment data is placed within an ESDRecordOrderPayment record and combined into a list of payment records. The payment records are then all placed into an ESDocumentOrderSale document for the sales order record obtained in the Sales Order data export. Data Hooks: |

| Sales Order Surcharges | Obtains a list of surcharges for a single sales order obtained from the Sales Order data export. The surcharge data is placed within an ESDRecordOrderSurcharge record and combined into a list of surcharge records. The surcharge records are then all placed into an ESDocumentOrderSale document for the sales order record obtained in the Sales Order data export. Data Hooks: |

| Sales Order Surcharge Taxes | Obtains a list of taxes for each sales order surcharge obtained from the Sales Order Surcharges data export. The surcharge tax data is placed within an ESDRecordOrderLineTax record and combined into a list of surcharge tax records. The surcharge tax records are then all placed into the ESDRecordOrderSurcharge record for each surcharge record found in the Sales Order Surcharges data export. Data Hooks: |

| Sales Representatives | Obtains a list of sales representatives. The data is placed within an ESDRecordSalesRep record when exported. The record data is all placed into an ESDocumentSalesRep document which is exported out in its final state to the Ecommerce system. |

| Sell Units | Obtains a list of sell units. The data is placed within an ESDRecordSellUnit record when exported. The records are then all placed into an ESDocumentSellUnit document which is exported out in its final state to the Ecommerce system. |

| Supplier Accounts | Obtains a list of supplier accounts. The data is placed within an ESDRecordSupplierAccount record when exported. The record data is all placed into an ESDocumentSupplierAccount document which is exported out in its final state to the Ecommerce system. |

| Supplier Account Addresses | Obtains a list of addresses assigned to supplier accounts. The data is placed within an ESDRecordSupplierAccountAddress record when exported. The record data is all placed into an ESDocumentSupplierAccountAddress document which is exported out in its final state to the Ecommerce system. |

| Surcharges | Obtains a list of surcharges. The data is placed within an ESDRecordSurcharge record when exported. The records are then all placed into an ESDocumentSurcharge document which is exported out in its final state to the Ecommerce system. |

| Taxcodes | Obtains a list of taxcodes. The data is placed within an ESDRecordTaxcode record when exported. The records are then all placed into an ESDocumentTaxcode document which is exported out in its final state to the Ecommerce system. |
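
The sales order exports in the table above build up a nested structure: lines, payments and surcharges sit under the order, taxes and attribute profiles sit under each line, and attributes sit under each attribute profile. The sketch below only illustrates that nesting. The Python class names loosely mirror the ESD record names, and every field name (such as `product_code` or `taxcode`) is an assumption made for illustration, not the actual Ecommerce Standards Documents definition.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical stand-ins for the ESD records named in the table.
# Only the parent/child nesting is taken from the documentation.


@dataclass
class LineTax:  # stands in for ESDRecordOrderLineTax
    taxcode: str
    amount: float


@dataclass
class LineAttribute:  # stands in for ESDRecordOrderLineAttribute
    name: str
    value: str


@dataclass
class LineAttributeProfile:  # stands in for ESDRecordOrderLineAttributeProfile
    profile_id: str
    attributes: List[LineAttribute] = field(default_factory=list)


@dataclass
class SaleLine:  # stands in for ESDRecordOrderSaleLine
    product_code: str
    quantity: float
    taxes: List[LineTax] = field(default_factory=list)
    attribute_profiles: List[LineAttributeProfile] = field(default_factory=list)


@dataclass
class Payment:  # stands in for ESDRecordOrderPayment
    method: str
    amount: float


@dataclass
class Surcharge:  # stands in for ESDRecordOrderSurcharge
    code: str
    amount: float
    taxes: List[LineTax] = field(default_factory=list)


@dataclass
class SaleOrder:  # stands in for ESDRecordOrderSale
    order_id: str
    lines: List[SaleLine] = field(default_factory=list)
    payments: List[Payment] = field(default_factory=list)
    surcharges: List[Surcharge] = field(default_factory=list)


def build_example_order() -> SaleOrder:
    """Assemble one order in the same order the exports are described."""
    order = SaleOrder(order_id="SO-1001")                      # Sales Order
    line = SaleLine(product_code="WIDGET", quantity=2)         # Sales Order Lines
    line.taxes.append(LineTax("GST", 1.50))                    # Sales Order Line Taxes
    profile = LineAttributeProfile("COLOURS")                  # Line Attribute Profiles
    profile.attributes.append(LineAttribute("colour", "red"))  # Line Attributes
    line.attribute_profiles.append(profile)
    order.lines.append(line)
    order.payments.append(Payment("CARD", 16.50))              # Sales Order Payments
    freight = Surcharge("FREIGHT", 5.00)                       # Sales Order Surcharges
    freight.taxes.append(LineTax("GST", 0.50))                 # Surcharge Taxes
    order.surcharges.append(freight)
    return order
```

Calling `build_example_order()` returns a single order object whose children can be walked from the order down to individual attributes, which is the shape each child export fills in for the parent record it was called for.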

Data Exports - Account Enquiry

The Generic adaptor has the data exports listed in the table below, which can only be called upon to retrieve records from external systems to the Connector. These exports allow for real time querying of records linked to customer or supplier accounts. They can be used to allow customers, staff, other people or software to search for and enquire about records in real time, such as looking up invoices or payments. The obtained data is converted into an Ecommerce Standards Document, and returned from the adaptor to the software calling the Connector for it to display or use the retrieved data.

JSON/XML/CSV Data Hooks

Any of the following data hooks may be embedded within any of the JSON/XML/CSV settings of the account enquiry based data exports listed below, when the data exports are assigned to a JSON/XML/CSV - Webservice/File data source type. These hooks are replaced with values, allowing record searches to be set up within JSON/XML/CSV webservice/file requests:

- {recordsPerPage}

Number of records to retrieve for each page of records requested. This number is the same as set within the data export's assigned JSON/XML/CSV data source type.

- {pageNumber}

The current page number being processed by the data export. The page number starts from number 1.

- {pageIndex}

The current page number being processed by the data export, with the page number offset by -1. For example, for page 1 this hook returns 0, and for page 2 it returns 1.

- {pageOffset}

The number of records that precede the current page, calculated as the current page number minus 1, multiplied by the number of records per page. For example, if each page is configured in the export's assigned JSON/XML/CSV data source type to contain 100 records, then this hook's value is 0 for the 1st page, 100 for the 2nd page, and 200 for the 3rd page ((page 3 - 1) * 100 records per page).

- {lastKeyRecordID}

Gets the key identifier of the last record that was retrieved by the data export. This is useful if pages of records are paginated not by number, but based on alphabetical order, using the key (unique identifier) of the record as the starting basis to retrieve the next page of records.

- {lastKeyRecordIDXMLencoded}

Same value as {lastKeyRecordID}, except that the value has been XML encoded to allow it to be safely embedded within XML or HTML data without breaking the structure of such documents.

- {lastKeyRecordIDURIencoded}

Same value as {lastKeyRecordID}, except that the value has been URI encoded to allow it to be safely embedded within URLs (avoiding breaking the syntax of a URL).
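
The arithmetic behind the paging hooks, and the effect of the encoded variants, can be sketched in a few lines of Python. This is an illustration only, not the Connector's implementation: the functions `paging_hook_values` and `apply_hooks`, the example URL, and the use of Python's standard `escape` and `quote` functions for XML and URI encoding are all assumptions.

```python
import re
from urllib.parse import quote
from xml.sax.saxutils import escape


def paging_hook_values(page_number, records_per_page, last_key_record_id=""):
    """Return the value each hook would take for the given page (illustrative)."""
    return {
        "{recordsPerPage}": str(records_per_page),
        "{pageNumber}": str(page_number),                            # starts at 1
        "{pageIndex}": str(page_number - 1),                         # starts at 0
        "{pageOffset}": str((page_number - 1) * records_per_page),   # records skipped
        "{lastKeyRecordID}": last_key_record_id,
        "{lastKeyRecordIDXMLencoded}": escape(last_key_record_id),
        "{lastKeyRecordIDURIencoded}": quote(last_key_record_id, safe=""),
    }


def apply_hooks(template, values):
    """Replace every known {hook} in a setting; unknown hooks are left untouched."""
    return re.sub(r"\{\w+\}", lambda m: values.get(m.group(0), m.group(0)), template)


# Hypothetical data export URL setting containing hooks.
url_template = (
    "https://example.com/api/invoices"
    "?limit={recordsPerPage}&offset={pageOffset}&after={lastKeyRecordIDURIencoded}"
)

values = paging_hook_values(page_number=3, records_per_page=100,
                            last_key_record_id="INV 100/A&B")
print(apply_hooks(url_template, values))
# prints https://example.com/api/invoices?limit=100&offset=200&after=INV%20100%2FA%26B
```

For page 3 with 100 records per page, `{pageIndex}` resolves to 2 and `{pageOffset}` to 200, matching the example given for {pageOffset}. The URI encoded key is safe to place in a query string, whereas the raw value `INV 100/A&B` would break the URL.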

Request Data Hooks

The following JSON/XML/CSV data hooks can be configured to output values as set up within the data export's field mappings. These hooks can be embedded within any of the JSON/XML/CSV settings of the account enquiry based data exports listed below. They allow the HTTP or file based requests to be modified based on the type of request being made to the Connector. For example, the {requestFilterClause1} hook may be placed within the Data Export URL, and its field mapping may use an IF function to change the URL, so that one web service endpoint is called when a single record needs to be found, and a different web service endpoint is called when multiple records may be found.

- {requestFilterClause1}

- {requestFilterClause2}

- {requestFilterClause3}

- {requestFilterClause4}

- {requestFilterClause5}

Each of these hooks can reference the following JSON data fields (as named below) from the original request, and can use JSON field functions to manipulate the data coming from the data export request.

- keyCustomerAccountID

Unique identifier of the customer account that records are to be obtained for.

- keySupplierAccountID

Unique identifier of the supplier account that records are to be obtained for.

- recordType

Type of records that are being searched on, e.g. INVOICE, ORDER_SALE, BACKORDER, CREDIT, PAYMENT, QUOTE.

- beginDate (1)

The earliest date to obtain records from. This value is set as a long integer containing the number of milliseconds since the 01/01/1970 12AM UTC epoch, also known as Unix time.

- endDate (1)

The latest date to obtain records up to. This value is set as a long integer containing the number of milliseconds since the 01/01/1970 12AM UTC epoch, also known as Unix time.

- pageNumber (1)

The current page number being processed by the data export. The page number starts from number 1.

- numberOfRecords (1)

Number of records to retrieve for each page of records requested. This number is the same as set within the data export's assigned JSON/XML/CSV data source type.

- outstandingRecords (1)

Either Y or N. If Y then only return records that are outstanding or unpaid.

- searchString (1)

Text data to search for and match records on. The field(s) to search on is dictated by the value set in the search type, or else a default field.

- keyRecordIDs (1)

List of key identifiers of records to match on. The list is comma delimited, with each key record ID value URI encoded.

- searchType (1)

Type of search to perform to match records on. For example, it could be set to invoiceNumber to search for invoice records that match a given invoice number.

- keyRecordID (2)

Unique key identifier of a single record to retrieve.

- dataSourceSessionID

ID of the session retrieved by the Data Source Session data export. Stores the ID of the session that may be required to be able to access and retrieve data from external systems.

The data fields marked with (1) are only available to data exports that perform searches for the details of a record.

The data fields marked with (2) are only available to data exports that retrieve the details of a single record's line.
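
To make the formats of these request fields concrete, the sketch below builds example values in Python: dates as milliseconds since the Unix epoch, and a comma delimited list of URI encoded key record IDs. It also imitates, in plain Python, the kind of IF-style decision a {requestFilterClause1} field mapping could make between a single-record endpoint and a search endpoint. The helper names, the example account and record identifiers, and the endpoint paths are all hypothetical, and the Connector's own field mapping functions use their own syntax rather than Python.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import quote

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_unix_millis(moment):
    """Milliseconds since 01/01/1970 12AM UTC for a timezone-aware datetime."""
    return (moment - EPOCH) // timedelta(milliseconds=1)


def encode_key_record_ids(ids):
    """Comma delimited list with each key record ID URI encoded."""
    return ",".join(quote(record_id, safe="") for record_id in ids)


def choose_endpoint(request):
    """Illustrative stand-in for an IF-style mapping on {requestFilterClause1}."""
    key_record_id = request.get("keyRecordID")
    if key_record_id:
        # A single record was requested: use a (hypothetical) detail endpoint.
        return "/invoices/" + quote(key_record_id, safe="")
    # Otherwise search by account and date range on a (hypothetical) list endpoint.
    return "/invoices?account={}&from={}&to={}".format(
        quote(request["keyCustomerAccountID"], safe=""),
        request["beginDate"],
        request["endDate"],
    )


# Example request data for a record search, using the field names listed above.
search_request = {
    "keyCustomerAccountID": "CUST 01",
    "recordType": "INVOICE",
    "beginDate": to_unix_millis(datetime(2024, 1, 1, tzinfo=timezone.utc)),
    "endDate": to_unix_millis(datetime(2024, 2, 1, tzinfo=timezone.utc)),
    "outstandingRecords": "Y",
    "keyRecordIDs": encode_key_record_ids(["INV-001", "INV 002"]),
}

print(search_request["beginDate"])      # prints 1704067200000
print(search_request["keyRecordIDs"])   # prints INV-001,INV%20002
print(choose_endpoint(search_request))
print(choose_endpoint({"keyRecordID": "INV/7"}))
```

Keeping the dates as integer milliseconds avoids any ambiguity about time zones or date formats when the values are embedded into a request through a hook.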

Data Export Definitions

| Data Export | Description |

|---|---|

| Customer Account Record Back Order Lines | Obtains a list of lines for a single back order assigned to a customer account. The data is placed within an ESDRecordCustomerAccountEnquiryBackOrderLine record when exported. Each of the back order line records is then placed into an ESDRecordCustomerAccountEnquiryBackOrder record. The record data is placed into an ESDocumentCustomerAccountEnquiry document which is exported out in its final state to the Ecommerce system. ODBC/SQL Data Hooks: Any of the following data hooks may be embedded within the data table data export fields, which will get replaced with values allowing record searches to be set up within SQL queries, based on the connecting Ecommerce system's needs. {recordType} - Type of customer account record being searched for. |

| Customer Account Record Back Orders | Obtains a list of back orders assigned to a single customer account, with records found based on matching a number of search factors such as date range, or record ID. The data is placed within an ESDRecordCustomerAccountEnquiryBackOrder record when exported. The record data is all placed into an ESDocumentCustomerAccountEnquiry document which is exported out in its final state to the Ecommerce system. ODBC/SQL Data Hooks: Any of the following data hooks may be embedded within the data table data export fields, which will get replaced with values allowing record searches to be set up within SQL queries, based on the connecting Ecommerce system's needs. {whereClause} - Contains a number of conditions that control how record searches occur. Record dates are matched using Unix timestamp long integer representations of dates. |

| Customer Account Record Credit Lines | Obtains a list of lines for a single credit assigned to a customer account. The data is placed within an ESDRecordCustomerAccountEnquiryCreditLine record when exported. Each of the credit line records is then placed into an ESDRecordCustomerAccountEnquiryCredit record. The record data is placed into an ESDocumentCustomerAccountEnquiry document which is exported out in its final state to the Ecommerce system. ODBC/SQL Data Hooks: Any of the following data hooks may be embedded within the data table data export fields, which will get replaced with values allowing record searches to be set up within SQL queries, based on the connecting Ecommerce system's needs. {recordType} - Type of customer account record being searched for. |

| Customer Account Record Credits |